
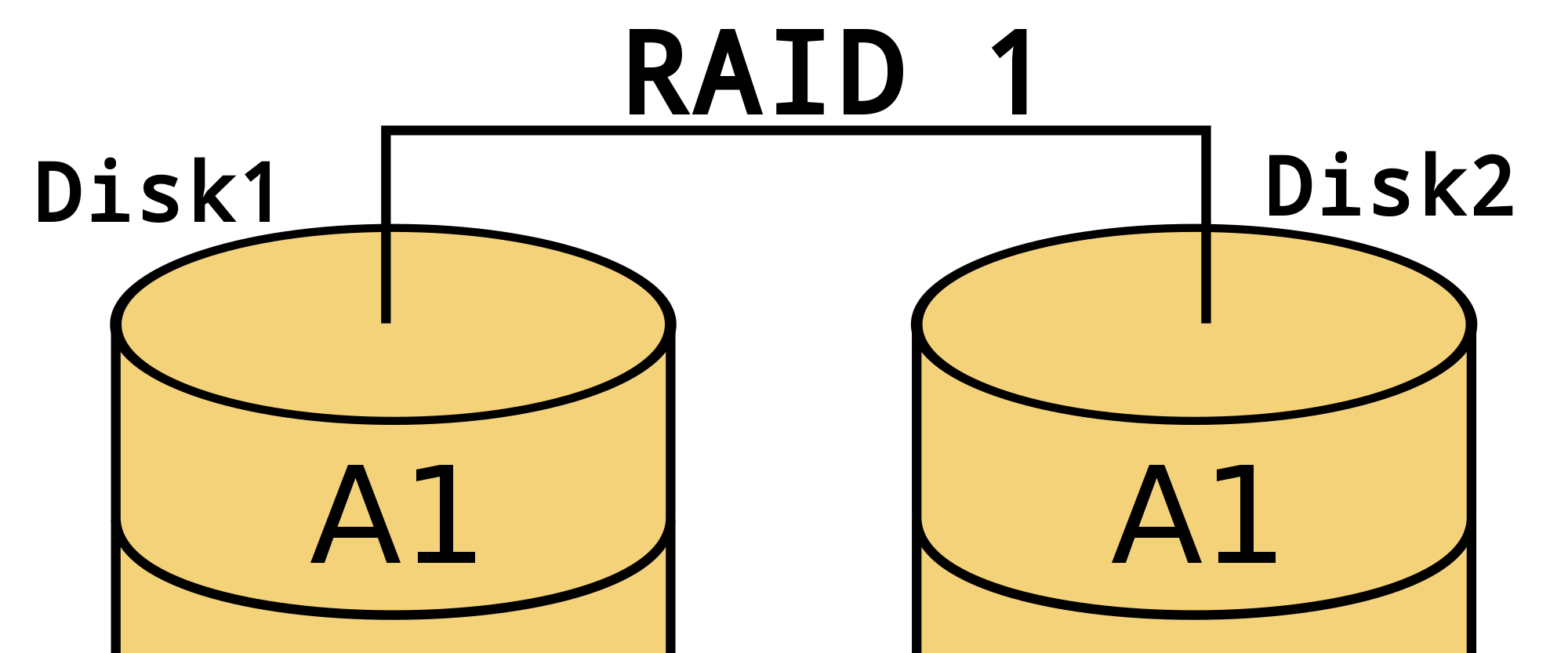

btrfs raid1 means mirroring all data and metadata at the file system level. If you have ever found some of your data lost after a btrfs scrub, you might recognize this output:

scrub status for <UUID>

scrub started at Thu Dec 25 15:19:22 2014 and was aborted after 89882 seconds

total bytes scrubbed: 1.87TiB with 4 errors

error details: csum=4

corrected errors: 0, uncorrectable errors: 4, unverified errors: 0

If you happen to have free space left on a spare disk, you can avoid these problems with a btrfs raid1 at the file system level. The following article will help you migrate.

Why use btrfs instead of mdadm for raid1?

Well, there are some advantages (and disadvantages). First, you gain a check at the file system level, because both metadata and data are checksummed and mirrored. These checks take some extra CPU, but btrfs is able to tell which of the copies is still healthy. Second, btrfs raid1 volumes are detected by your initrd automatically: both partitions (or disks) are mounted as one single volume without any additional configuration. Last, it is really easy to set up. The disadvantages are higher CPU usage for checking (scrubbing), and that you need to create the check jobs (btrfs scrub) manually.

Prepare your disk or btrfs partition for raid1

If you are going to use a btrfs raid1, both partitions should have the same size. This means you need to size them to fit the smallest of your disks. The easiest way to find the correct number of sectors is fdisk:

[server] [~] # fdisk -l /dev/sdb

Disk /dev/sdb: 698.7 GiB, 750156374016 bytes, 1465149168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x000325ff

Device Boot Start End Sectors Size Id Type

/dev/sdb2 2048 1465147391 1465145344 698.7G 83 Linux

The last row of the output shows the number of sectors (1465145344) in the Sectors column. This is the target size. Write it down and create a new partition, /dev/sdc1 in my case. With the command sudo cfdisk /dev/sdc you can create the new partition via a text user interface. As the new partition size I entered 1465145344S. Please note the trailing S! You don't need to format this partition with mkfs.btrfs, but you can use sudo partprobe to make your kernel re-read the partition table.
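Instead of copying the number by hand, the sector count can be pulled out of the fdisk listing with a small helper. This is only a minimal sketch: partition_sectors is a hypothetical helper name, and it assumes the column layout shown above (a bootable partition has an extra "*" field in the Boot column, which shifts the columns by one).

```shell
#!/bin/sh
# Hypothetical helper: print the "Sectors" column for one partition
# from `fdisk -l` output read on stdin.
# Assumes the layout: Device Start End Sectors Size Id Type
# (with a "*" boot flag the sector count would be field 5 instead).
partition_sectors() {
    awk -v part="$1" '$1 == part { print $4 }'
}

# Example using the listing from above, so no root access is needed.
sample='Device Boot Start End Sectors Size Id Type
/dev/sdb2 2048 1465147391 1465145344 698.7G 83 Linux'
printf '%s\n' "$sample" | partition_sectors /dev/sdb2
```

On a live system the call would be sudo fdisk -l /dev/sdb | partition_sectors /dev/sdb2. Appending S to the result gives the value to enter in cfdisk.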

Mount the partitions

To mount the existing partition (containing the data), use this command.

sudo mount /dev/sdb2 /media/nextcloud

The next step is to add the newly created partition to the existing mountpoint. The corresponding btrfs command is:

btrfs device add /dev/sdc1 /media/nextcloud

Creating the btrfs raid1

But we are not done yet! At this point we have created a JBOD (»just a bunch of disks«), which means a single big file system, in my case about 1200 GiB. To convert this volume into a functional raid1 you can use two super easy commands. You could combine them into a single command, but I wouldn’t recommend doing so, because converting data and metadata at once is slower.

Convert metadata to raid1

The first step in converting the disk array is to convert the metadata to raid1.

btrfs fi balance start -mconvert=raid1,soft /media/nextcloud/

Explanation:

- fi balance (short for filesystem balance) is a btrfs command that lets you select a new profile for your data.

- -m is the argument to work with metadata.

- -mconvert is the argument to convert the metadata to a new profile. This profile is applied to all the disks in the array.

- raid1,soft means we chose the raid1 profile. soft skips everything that already has the target profile. If this is your first time running the command, it will process all of the metadata anyway.

Convert data to raid1

In the second step all data is mirrored to the other disk.

btrfs fi balance start -dconvert=raid1,soft /media/nextcloud/

This time, -dconvert= applies the new profile to all data on the disk array. This will take a little longer, depending on how much data you already have on your disk. If you want to watch the progress in another shell, you can type this command:

btrfs filesystem balance status /media/nextcloud
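The steps so far (adding the device, converting metadata, converting data) can be collected in one script. The following is a minimal sketch, not the author's script: run and convert_to_raid1 are hypothetical helper names, and it uses btrfs balance start, the current spelling of btrfs fi balance start. With DRY_RUN=1, which is the default here, the commands are only printed.

```shell
#!/bin/sh
# Sketch of the whole conversion. With DRY_RUN=1 (the default) the commands
# are only printed, so nothing touches the file system.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Arguments: new device, mount point of the existing btrfs volume.
convert_to_raid1() {
    new_dev="$1"
    mnt="$2"
    run btrfs device add "$new_dev" "$mnt" || return 1
    # Metadata first, then data, as described above.
    run btrfs balance start -mconvert=raid1,soft "$mnt" || return 1
    run btrfs balance start -dconvert=raid1,soft "$mnt" || return 1
    # Show the resulting chunk profiles.
    run btrfs filesystem usage "$mnt"
}

convert_to_raid1 /dev/sdc1 /media/nextcloud
```

Review the printed commands first. Running the script as root with DRY_RUN=0 would execute them for real.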

Final test

Disk usage and single chunks

After applying the new profile on your btrfs raid1 file system, there may still be single chunks left which are located on only one physical disk. Usually these consume zero bytes internally, because all the data has been converted. You can find out using this command:

btrfs fi usage /mnt/btrfs

…

Data,single: Size: ~ GiB, Used: ~GiB

/dev/sdc1 1.00GiB

…

You can get rid of those empty (but space allocating) chunks by using yet another btrfs command.

btrfs balance start -dusage=0 -musage=0 /mnt/btrfs

The above command will run a balance on the volume and clean up any chunks that hold no data.
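To check from a script whether any single chunks are left, the chunk headers in the usage output can be filtered. This is a minimal sketch: single_chunks is a hypothetical helper name, and the sample text is made up for illustration in the format that btrfs fi usage prints.

```shell
#!/bin/sh
# Hypothetical helper: read `btrfs filesystem usage` output on stdin and
# print every chunk header that still uses the "single" profile.
# Exit status follows grep: 0 if such chunks exist, 1 if none were found.
single_chunks() {
    grep -E '^(Data|Metadata|System),single:'
}

# Illustrative sample, not real output from the volume in this article.
sample='Data,RAID1: Size:122.00GiB, Used:119.92GiB
Data,single: Size:1.00GiB, Used:0.00B
Metadata,RAID1: Size:1.00GiB, Used:189.17MiB'
printf '%s\n' "$sample" | single_chunks
```

On a live system the input would come from sudo btrfs fi usage followed by the mount point. No output means every chunk has been converted.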

Mount options

Both disks are mounted to the btrfs volume automatically. If you want to make really sure this happens properly, or your initrd does not support btrfs device detection (btrfs device scan), you can still use mount options for this. You need to list all devices that are used in the array. In this example I use device names in my /etc/fstab. The better option would be to use UUIDs for this.

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a

# device; this may be used with UUID= as a more robust way to name devices

# that works even if disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

# …

# /dev/sdb2 (nextcloud)

UUID=4466888b-629a-439e-9f91-809b7f5fd309 /media/nextcloud btrfs device=/dev/sdb2,device=/dev/sdc1,defaults,rw,user,nofail 0 2

You can list the needed UUIDs (optional) using this command:

ls -lF /dev/disk/by-uuid/ | grep 'sd[bc]'
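Since every member of the array has to appear as its own device= option, the fstab entry can be generated rather than typed. A minimal sketch, assuming the same mount options as in the entry above; btrfs_fstab_line is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print an fstab entry for a multi-device btrfs volume.
# Usage: btrfs_fstab_line <fs-uuid> <mount-point> <device>...
btrfs_fstab_line() {
    uuid="$1"
    mnt="$2"
    shift 2
    opts=""
    # One device= option per member of the array.
    for dev in "$@"; do
        opts="${opts}device=${dev},"
    done
    printf 'UUID=%s %s btrfs %sdefaults,rw,user,nofail 0 2\n' "$uuid" "$mnt" "$opts"
}

btrfs_fstab_line 4466888b-629a-439e-9f91-809b7f5fd309 /media/nextcloud /dev/sdb2 /dev/sdc1
```

The helper only prints the line. Check it before appending it to /etc/fstab.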

Check your new volume

If you want to make really sure everything went well, you can check which devices belong to a volume:

[server] [~] # btrfs fi show /media/nextcloud/

Label: 'Externe Festplatten RAID1' uuid: 4466888b-629a-439e-9f91-809b7f5fd309

Total devices 2 FS bytes used 120.10GiB

devid 1 size 698.64GiB used 123.03GiB path /dev/sdb2

devid 2 size 698.64GiB used 123.03GiB path /dev/sdc1

You can see two devices (or partitions) of about 700 GiB each. Both mirror about 120 GiB of data; including metadata, about 123 GiB is actually used on each physical device. You can see the exact sizes using btrfs fi usage:

[server] [~] # btrfs fi usage /media/nextcloud/

Overall:

Device size: 1.36TiB

Device allocated: 246.06GiB

Device unallocated: 1.12TiB

Device missing: 0.00B

Used: 240.21GiB

Free (estimated): 577.68GiB (min: 577.68GiB)

Data ratio: 2.00

Metadata ratio: 2.00

Global reserve: 64.00MiB (used: 0.00B)

Data,RAID1: Size:122.00GiB, Used:119.92GiB

/dev/sdb2 122.00GiB

/dev/sdc1 122.00GiB

Metadata,RAID1: Size:1.00GiB, Used:189.17MiB

/dev/sdb2 1.00GiB

/dev/sdc1 1.00GiB

System,RAID1: Size:32.00MiB, Used:48.00KiB

/dev/sdb2 32.00MiB

/dev/sdc1 32.00MiB

Unallocated:

/dev/sdb2 575.60GiB

/dev/sdc1 575.60GiB

As you can see, the 123 GiB on each device are split up into data, metadata and system chunks.
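The numbers can be checked by adding up the per-device chunk sizes from the listing. This is a small worked example, with per_device_gib as a hypothetical helper name.

```shell
#!/bin/sh
# Add up the per-device chunk sizes from the listing above.
# Arguments: data in GiB, metadata in GiB, system in MiB (all per device).
per_device_gib() {
    awk -v d="$1" -v m="$2" -v s="$3" 'BEGIN { printf "%.2f\n", d + m + s / 1024 }'
}

# 122.00 GiB data + 1.00 GiB metadata + 32 MiB system on each device.
per_device_gib 122.00 1.00 32
```

This prints 123.03, matching the "used" column of btrfs fi show. Both devices together come to about 246.06 GiB, which is the "Device allocated" value.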

Maintenance using btrfs scrub

Now to the real advantage: btrfs scrub. If you happen to have incorrect data on one of the disks, you want btrfs to notice. For this you use btrfs scrub, which of course works properly on a btrfs raid1. It detects faulty data and/or metadata and overwrites it with the correct data from the still healthy raid member. You can run the command via cron or a systemd timer. I chose a systemd timer, but did not have a specific reason to choose it over cron.
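A scheduled job usually wants to react only when a scrub could not repair something. The following minimal sketch extracts that number from the status output. uncorrectable_errors is a hypothetical helper name, and it assumes the output format shown at the top of this article; other btrfs-progs versions may print the summary differently.

```shell
#!/bin/sh
# Hypothetical helper: read `btrfs scrub status` output on stdin and print
# the number of uncorrectable errors. Prints nothing if the line is absent.
uncorrectable_errors() {
    sed -n 's/.*uncorrectable errors: \([0-9][0-9]*\).*/\1/p'
}

# Sample taken from the scrub output at the top of this article.
sample='total bytes scrubbed: 1.87TiB with 4 errors
error details: csum=4
corrected errors: 0, uncorrectable errors: 4, unverified errors: 0'
printf '%s\n' "$sample" | uncorrectable_errors
```

On a live system the input would be sudo btrfs scrub status /media/nextcloud. Any value other than 0 means data was lost on both copies.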

Install systemd-unit and timer

You can find the original sources I used in this gist on GitHub: https://gist.github.com/gbrks/11b9d68d19394a265d70. I needed to change some parts, though, so just read on. You should place the files at these locations:

- /usr/local/bin/btrfs-scrub

- /etc/systemd/system/btrfs-scrub.service

- /etc/systemd/system/btrfs-scrub.timer

Please do replace one thing: inside the service file, replace Type=simple with Type=oneshot, because the process will terminate (exit) after scrubbing.
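For orientation, this is roughly what such a pair of unit files can look like. The contents below are assumptions for illustration and not the files from the gist: the monthly schedule, the descriptions and the write_scrub_units helper are all made up here. The sketch writes into a scratch directory so the result can be inspected first.

```shell
#!/bin/sh
# Sketch: write a service and a timer unit into a target directory.
# The unit contents are assumptions for illustration, not the gist's files.
write_scrub_units() {
    dir="$1"
    cat > "$dir/btrfs-scrub.service" <<'EOF'
[Unit]
Description=btrfs scrub

[Service]
Type=oneshot
ExecStart=/usr/local/bin/btrfs-scrub
EOF
    cat > "$dir/btrfs-scrub.timer" <<'EOF'
[Unit]
Description=Monthly btrfs scrub

[Timer]
OnCalendar=monthly
Persistent=true

[Install]
WantedBy=timers.target
EOF
}

# Write to a scratch directory first so nothing under /etc is touched.
target="$(mktemp -d)"
write_scrub_units "$target"
cat "$target/btrfs-scrub.service"
```

In this sketch only the timer has an [Install] section, because the timer is what starts the service. The files from the gist may be laid out differently.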

Activate the btrfs raid1 scrub service

The timer and service need to be enabled to actually execute. The commands for systemd are:

sudo systemctl daemon-reload

sudo systemctl enable btrfs-scrub.service

sudo systemctl enable btrfs-scrub.timer

sudo systemctl start btrfs-scrub.timer

The first command tells systemd to re-read its configuration files. After that, the service and the timer are enabled and the timer is started. The timer has to be started at least once.

Configure email notifications

You can configure the email notification to suit your needs. I did not have a Mailgun account and wanted to use my existing Gmail account. This is possible using curl, netrc and only a few changes. The first thing you need to do is create the file /root/.netrc:

machine smtp.google.com

login user@gmail.com

password myapppw

If you don’t want to use your primary password (and you really don’t!), you should create an app password. If you use two-factor authentication, this step is mandatory. Now you can replace the gist from above with my fork containing the documentation and changes to the original scrub job.
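To illustrate the curl and netrc approach, here is a minimal sketch of how a report could be turned into a mail message. It is not the code from the fork: build_mail is a hypothetical helper name, and the SMTP host, port and addresses in the commented-out curl call are placeholders. The sketch assumes that your curl build reads SMTP credentials from ~/.netrc.

```shell
#!/bin/sh
# Hypothetical helper: build a minimal mail message (headers, blank line, body).
# Arguments: sender, recipient, subject. The body is read from stdin.
build_mail() {
    printf 'From: %s\nTo: %s\nSubject: %s\n\n' "$1" "$2" "$3"
    cat
}

# Sending (commented out; host, port and addresses are placeholders):
# btrfs scrub status /media/nextcloud \
#   | build_mail user@gmail.com user@gmail.com 'btrfs scrub report' \
#   | curl --ssl-reqd --netrc --crlf --url 'smtps://smtp.example.com:465' \
#          --mail-from user@gmail.com --mail-rcpt user@gmail.com \
#          --upload-file -

# Harmless demonstration of the message format:
printf 'no errors\n' | build_mail a@example.com b@example.com 'test'
```

The host in the curl URL has to match the machine entry in /root/.netrc, otherwise curl will not find the credentials.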

Error corrections sent by mail

In case actual errors are found on your btrfs raid1 volume, they will be corrected and reported to you by mail. If there were errors, the output might look like this example documented in Oracle’s blog:

$ btrfs scrub status /btrfs

scrub status for 15e213ad-4e2a-44f6-85d8-86d13e94099f

scrub started at Wed Sep 28 12:36:26 2011 and finished after 2 seconds

total bytes scrubbed: 200.48MB with 13 errors

error details: csum=13

corrected errors: 13, uncorrectable errors: 0, unverified

$ dmesg | tail

btrfs: fixed up at 1103101952

btrfs: fixed up at 1103106048

btrfs: fixed up at 1103110144

btrfs: fixed up at 1103114240

btrfs: fixed up at 1103118336

btrfs: fixed up at 1103122432

btrfs: fixed up at 1103126528

btrfs: fixed up at 1103130624

btrfs: fixed up at 1103134720

btrfs: fixed up at 1103138816

As you can see, the errors are shown as corrected. Yay! \o/

Conclusion

With btrfs you get a pretty nice raid1 implementation at the file system layer. It is set up quite quickly. The disadvantages are a slightly higher CPU load and having to install all the maintenance scripts for running btrfs scrub on your btrfs raid1 manually.